Hey guys, have you ever dreamed of having your own little ChatGPT on your computer, where your data really belongs only to you?

Then fasten your seatbelts, because LM Studio (as of 25 September, version 0.3.27) is here to make this dream come true on Linux, Mac and Windows! This super cool desktop app makes it easy to run large language models (LLMs) like Llama, Phi or Gemma locally on your computer. No cloud subscription, no data harvesting, just pure AI power that you control yourself. And for everyone who has so far steered clear of Hugging Face and the command line: all clear! It has a clean, easy-to-understand GUI.

I'll guide you through the setup and show you how to unleash your own AI magic in no time at all. Whether you're writing, coding, or just curious, LM Studio is a real game changer. Let's get started and let some AI sparks fly!

What is LM Studio? Your local AI adventure

Think of LM Studio as your personal AI hub. It's an app for Windows, Mac, and Linux that brings open-source LLMs from Hugging Face directly onto your computer. You can browse, download, and chat with models; once a model is downloaded, you need neither an internet connection nor expensive cloud services.

LM Studio does all the technical work for you. It loads the models into memory and provides an intuitive interface reminiscent of ChatGPT. So you can fully focus on asking questions or creating cool stuff. The best thing about it? Your data never leaves your computer.

Install LM Studio: Easier than an IKEA shelf

Installing LM Studio is really a no-brainer. I promise!

- Hardware check:

Your computer needs some power, but don't worry, it doesn't have to be a supercomputer.

- RAM: At least 16 GB is ideal for most models (8 GB is enough for smaller models).

- Storage: Models are 2 to 20 GB in size. Make room on your hard drive.

- CPU/GPU: A modern CPU is enough, but a GPU (NVIDIA/AMD) makes everything faster. Mac users with M1/M2/M3 chips have a clear advantage here, as their integrated GPU works extremely well. The same goes for newer NVIDIA GPUs with CUDA support (RTX series and up).

- Download & Installation:

- Go to lmstudio.ai and download the installer for your operating system.

- Run it and follow the instructions. It's a classic ‘Next, Next, Done’ setup.

- Start LM Studio. You will land on the home page with a search bar and a selection of models. If you overlook the inconspicuous grey ‘Skip’ button, a first model will be downloaded directly in the background.

That was it! For me, it took less than five minutes.

Using LM Studio: Discover and chat with models

Now that LM Studio is running, the fun can begin!

- Select and download model:

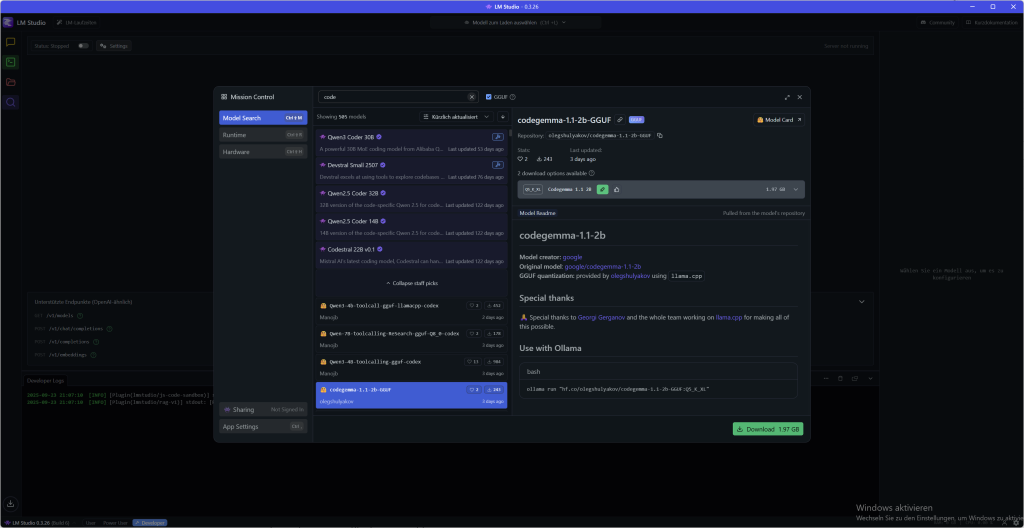

- Click on the magnifying glass icon (‘Discover’) in the left sidebar. This is your model candy store.

- Look for a model that interests you, such as Llama 3.1 8B or Mistral-7B-Instruct.

- For beginners, I recommend a quantized model (e.g. a Q4_K_M version) with 4 to 8 GB. They are fast and efficient.

- Just click on "Download" next to the model. The progress is displayed in the Downloads tab.

- Loading and Chatting:

- Once the download is complete, go to the folder icon (‘My Models’).

- Click on the downloaded model and then click on "Load". The model is now loaded into memory.

- Switch to the speech bubble icon (‘Chat’) and select your loaded model from the drop-down menu.

- Now you can get started! Ask the AI for a joke, have it write a poem, or let it explain a complex concept to you.

The chat feels exactly like an online service, but the entire conversation takes place offline. Your words remain private, and there are no usage limits and no subscription costs.

Download LLMs: Discover and select models

LM Studio has its own model downloader that is connected directly to Hugging Face. Here's how to find the perfect model for you:

- Search and find: Go to the ‘Discover’ tab (the magnifying glass) or simply press ⌘ + 2 (Mac) or Ctrl + 2 (Windows/Linux). You can search by keywords such as ‘llama’, ‘gemma’ or ‘lmstudio’. You can even paste complete Hugging Face URLs into the search bar!

- Quality vs. size: For each model, there are often several download options with cryptic-sounding names such as Q3_K_S or Q8_0. The Q stands for quantization, a technique that compresses models. As a result, they become smaller and run faster, but lose a bit of quality. For starters, choose a 4-bit option or higher; these offer a good compromise. Details can be found in this article.
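The size side of this trade-off can be put into numbers. As a rough rule of thumb (a simplification that ignores metadata and mixed-precision layers), a model file takes about parameters × bits per weight ÷ 8 bytes. The bit widths in the sketch are illustrative assumptions, not exact values for each quantization format, and `estimate_size_gb` is a hypothetical helper.

```python
# Rough estimate of a quantized model's file size.
# Assumption: size ≈ parameters × bits per weight / 8, ignoring metadata overhead.


def estimate_size_gb(params_billion: float, bits_per_weight: float) -> float:
    """Return the approximate model size in gigabytes (10^9 bytes)."""
    total_bytes = params_billion * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9


# Illustrative bit widths (assumed values, not exact figures for each format)
for label, bits in [("~4-bit", 4.5), ("8-bit", 8.0), ("16-bit (unquantized)", 16.0)]:
    print(f"8B model, {label}: about {estimate_size_gb(8, bits):.1f} GB")
```

For an 8B model, roughly 4.5 bits per weight comes out at about 4.5 GB, 8 bits at about 8 GB and unquantized 16-bit weights at about 16 GB, which is why 4-bit variants land in the 4 to 8 GB range recommended for beginners.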

Simply select the desired model and click on ‘Download’. The progress is displayed in the Downloads tab. By the way, if the default location does not fit, you can change the location of your models in the My Models tab.

The interface: From beginner to professional user

LM Studio isn't just a tool; it adapts to you. Whether you're just getting started or you're already a real AI pro, the UI offers three different modes in the footer at the bottom left, which you can easily switch between. That way, you only see the functions you really need.

The modes at a glance:

- User: This mode is perfect for beginners. It only shows the chat interface and automatically configures everything in the background. You don't have to worry about anything and can get started right away. Simply load a model and chat; it doesn't get any easier.

- Power User: If you want more control, this is your mode. Here you have access to all loading and inference parameters and advanced chat functions. You can fine-tune your conversations and, for example, edit messages afterwards or reinsert parts of the conversation. This is ideal if you want to dig deeper without being overwhelmed by too many options. The gear for the Power User settings is located at the top center, to the left of the loaded-model display.

- Developer: For those who want everything! This mode unlocks all functions, including special developer options and all keyboard shortcuts. Here you can adjust every single setting of LM Studio and tailor the AI exactly to your needs. If you work with the API or the command line, you'll love this mode.

You can easily select your mode at the bottom of the app. Start as User, and when you feel more confident, just switch to Power User or Developer mode. This is how LM Studio grows with your abilities.

Chat with documents: RAG for your local files

An absolute highlight of LM Studio is the ability to chat with your own documents, completely offline! You can simply attach .docx, .pdf or .txt files to your chat session and give the AI extra context.

How does it work?

- RAG (Retrieval-Augmented Generation): If your document is very long, LM Studio applies a clever technique called RAG. The app searches your file for the information most relevant to your question and feeds only these ‘found’ snippets to the LLM. It's like a personal librarian who picks out just the important passages for you.

- Full context: If your document is short enough (i.e. it fits into the model's ‘memory’, also called context), LM Studio simply adds the entire content. This works great with newer models like Llama 3.1 or Mistral Nemo, which support longer contexts.

Tip for successful RAG: The more precise your question, the better. If you ask about terms, ideas, or specific words that appear in the document, you increase the chance that the system finds exactly the right information and passes it on to the model.
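To make the two strategies concrete, here is a toy sketch in Python. It is not how LM Studio implements retrieval internally: it assumes roughly 4 characters per token (a common rule of thumb) and scores chunks by simple word overlap with the question, whereas real RAG systems typically compare embeddings. The function names are hypothetical.

```python
import re

CHARS_PER_TOKEN = 4  # rough heuristic, an assumption for this sketch


def estimate_tokens(text: str) -> int:
    return len(text) // CHARS_PER_TOKEN + 1


def words(text: str) -> set[str]:
    return set(re.findall(r"\w+", text.lower()))


def build_context(document: str, question: str, context_tokens: int,
                  chunk_chars: int = 500, top_k: int = 3) -> str:
    """Return the text that would be handed to the model alongside the question."""
    # Full context: the whole document fits, so use all of it.
    if estimate_tokens(document) + estimate_tokens(question) <= context_tokens:
        return document
    # RAG: split into chunks and keep those sharing the most words with the question.
    chunks = [document[i:i + chunk_chars] for i in range(0, len(document), chunk_chars)]
    query = words(question)
    ranked = sorted(chunks, key=lambda c: len(query & words(c)), reverse=True)
    return "\n---\n".join(ranked[:top_k])
```

The toy scoring also shows why the tip about precise questions matters: the more words your question shares with the relevant passage, the higher that passage ranks.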

Helpful tips and tricks

Editing the output

LM Studio has a particularly interesting function for post-editing the outputs of your prompts: with the Edit function, you can change the LLM's output itself. You'll find it as a pen icon below the output. Changes you make there carry over into the rest of your chat history: the LLM treats your edit as new context for the rest of the conversation.

Let's say you want to create an overview for one of your projects. You enter your prompt, but the output needs revising because key figures or information came out different or wrong. Simply edit it, and the LLM will continue working with your corrected information.
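Why does an edit carry forward? Conceptually, a chat is a list of messages that is sent to the model again on every turn. The sketch shows that idea with an OpenAI-style message list; it illustrates the concept, not LM Studio's internal code. The function name `edit_message` and the budget figures are made up for the example.

```python
# A chat history in the OpenAI-style format: a list of role/content dicts.
history = [
    {"role": "user", "content": "Summarize project Alpha."},
    {"role": "assistant", "content": "Project Alpha has a budget of 50,000 EUR."},
]


def edit_message(messages: list[dict], index: int, new_content: str) -> None:
    """Overwrite one message in place; every later request includes the new text."""
    messages[index]["content"] = new_content


# Correct the wrong figure, then ask a follow-up question.
edit_message(history, 1, "Project Alpha has a budget of 80,000 EUR.")
history.append({"role": "user", "content": "Draft a status update."})
# The next request would send `history`, so the model now sees 80,000 EUR.
```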

Structured output

Sometimes plain text is not enough. If you want your AI not only to talk but also to spit out data in a certain format, then Structured Output is your best friend. Whether JSON, YAML or Markdown tables, you can specifically instruct the AI to stick to a format.

This is super useful if you want to feed the AI output directly into other systems, such as a Telegram bot, a database or your development environment (IDE).

You simply give your model the instruction directly in the prompt or in the system prompt. For example:

Act like a financial analyst. Your output should be in this JSON format: {"recommendation": "string", "reason": "string"}

The AI will then no longer respond with free text, but with a JSON object that matches your specification.

But beware: Not every model is equally good at sticking to these strict rules. The ability to produce valid JSON or other formats depends heavily on the model. So it's best to test a few models to find the one that delivers structured output most reliably.
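Since you can't blindly trust every model, it's worth checking a reply before passing it on. A minimal sketch using Python's standard json module, assuming a reply object with the string keys `recommendation` and `reason`; `parse_analysis` is a hypothetical helper name.

```python
import json
from typing import Optional

REQUIRED_KEYS = {"recommendation", "reason"}  # assumed format for this sketch


def parse_analysis(raw_reply: str) -> Optional[dict]:
    """Return the parsed object if the reply is valid JSON with the expected keys, else None."""
    try:
        data = json.loads(raw_reply)
    except json.JSONDecodeError:
        return None  # not valid JSON at all
    if not isinstance(data, dict) or not REQUIRED_KEYS.issubset(data):
        return None  # valid JSON, but not the agreed structure
    if not all(isinstance(data[key], str) for key in REQUIRED_KEYS):
        return None  # keys present, but values are not strings
    return data
```

Run this over the replies of a few candidate models and count how often it returns None; that gives you a simple reliability score for comparing models.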

Configure LM Studio: Make it your own

LM Studio is great out of the box, but you can also adapt it to your needs. For those of you who don't want to read it all, here's a video:

More power in the chat: Branch & Folder

Sometimes you just need a fresh start without losing the previous conversation. That's exactly what ‘Branch’ is for! This handy feature, found right below the chat, creates a copy of your ongoing conversation.

This is perfect if, for example, you have written a new paragraph for your presentation and now want to generate a prompt for an image AI from it. Simply click ‘Branch’, create the new prompt and copy the result into an external image generation tool. Meanwhile, you can continue the original chat without overwriting anything.
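Conceptually, a branch is an independent copy of the message history up to that point. A minimal sketch of the idea with Python's copy module; it illustrates the concept, not LM Studio's implementation, and `branch` is a hypothetical name.

```python
import copy


def branch(messages: list[dict]) -> list[dict]:
    """Return an independent copy of a chat history; changes to it don't touch the original."""
    return copy.deepcopy(messages)


main_chat = [
    {"role": "user", "content": "Write a paragraph for my presentation."},
    {"role": "assistant", "content": "Local AI keeps your data on your own machine."},
]

image_chat = branch(main_chat)
image_chat.append({"role": "user", "content": "Turn the last paragraph into an image prompt."})
main_chat.append({"role": "user", "content": "Now write the next paragraph."})
```

A deep copy rather than a plain list copy is used so that editing a message inside the branch can't leak back into the original chat.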

Speaking of chats: LM Studio saves all your conversations in a clear menu on the left. Here you can create folders and sort your chats simply by drag-and-drop, so you keep track even of many projects.

Storage path for your conversations

On Mac/Linux you can find them here:

    ~/.lmstudio/conversations/

On Windows, they're here:

    %USERPROFILE%\.lmstudio\conversations

Directories starting with a . are not shown by default, so turn on the option to show hidden and system directories.
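If you want to back up or inspect this folder from a script, Python's pathlib resolves the home directory on all three systems (on Windows, `Path.home()` corresponds to %USERPROFILE%). The sketch only lists files and makes no assumptions about their internal format; the helper names are hypothetical.

```python
from pathlib import Path


def conversations_dir() -> Path:
    """Path of LM Studio's conversation folder (~/.lmstudio/conversations)."""
    return Path.home() / ".lmstudio" / "conversations"


def list_conversation_files() -> list[Path]:
    """Return all files in the folder and its sub-folders; empty if the folder doesn't exist."""
    folder = conversations_dir()
    if not folder.is_dir():
        return []
    return sorted(p for p in folder.rglob("*") if p.is_file())


for path in list_conversation_files():
    print(path)
```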

With eyes and without censorship: Vision models & ‘Abliterated’ LLMs

You want to work not only with text, but also with images? No problem! LM Studio supports vision models, which can process visual content. Just look for models with a yellow eye icon, such as Gemma 3 4B. You can attach images to these models in the chat and ask them to analyze the content or generate prompts from it.
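Vision models can also be reached through LM Studio's local API. A sketch, assuming the OpenAI-style chat format in which an image is sent as a base64 data URL inside an `image_url` content part; check the LM Studio docs for the exact fields your version accepts. The function name is hypothetical, and the snippet only builds the message without sending it.

```python
import base64


def image_message(question: str, image_bytes: bytes, mime: str = "image/png") -> dict:
    """Build one user message that combines a text question with an inline image."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "role": "user",
        "content": [
            {"type": "text", "text": question},
            {"type": "image_url", "image_url": {"url": f"data:{mime};base64,{encoded}"}},
        ],
    }


# Usage: read a local file and wrap it, e.g.
# with open("photo.png", "rb") as f:
#     msg = image_message("What is shown in this picture?", f.read())
```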

If your stories or ideas run into the ethical filters of normal AIs, there is also a solution: ‘uncensored’ models. These LLMs have been modified by the community to have less stringent safety guardrails. You can often find them in the model search with the keyword Abliterated. But be warned: their creators point out that these models can produce sensitive, controversial or inappropriate content. So use them wisely!

The secret control center: Your System Prompt in LM Studio

Every powerful tool has its secret instructions for use. For large language models (LLMs), that's the system prompt. It's not a normal command but an overriding instruction that shapes the entire conversation and the personality of your AI. If a user prompt is a single question, then the system prompt is the ‘job profile’ that defines the AI for the entire session.

Why is this so important? For local models, which are smaller and more specialized, the system prompt is key. It gives them the direction they need to work accurately and reliably. You can use it to instruct the AI to adopt a specific character (such as a pirate), focus on only one topic, or even spell out its reasoning step by step. This is exactly what helps smaller models come closer to the larger ones and achieve amazing results.

System Prompt vs. User Prompt: The distinction is crucial

Terms such as ‘prompt’ and ‘preset’ are often confused. But the distinction is simple and super important:

- The system prompt sets the rules and personality for the whole conversation. It is static and persists.

- The user prompt is the specific question or task you put to the AI in each round. It is dynamic and constantly changing.

This separation ensures that the AI doesn't forget its role and gives consistent answers.
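In the OpenAI-style message format that LM Studio's API also uses, this split is directly visible: the system message sits at the start and stays, while each round appends a new user message. A minimal sketch; the `ask` helper is hypothetical, only builds the message list, and omits the model's replies for brevity.

```python
SYSTEM_PROMPT = "You are a helpful assistant who always responds in the style of a haiku."

history: list[dict] = []  # user turns (model replies omitted in this sketch)


def ask(question: str) -> list[dict]:
    """Append a new user turn and return the full message list for the next request."""
    history.append({"role": "user", "content": question})
    # The system prompt is always sent first, unchanged, on every round.
    return [{"role": "system", "content": SYSTEM_PROMPT}] + history


first = ask("What is a large language model?")
second = ask("And what is quantization?")
```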

How to use the system prompt in LM Studio

LM Studio makes it easy to harness this power. In the top right corner there is an inconspicuous wrench. Clicking it takes you to the Content, Model and Prompt settings. In the Content panel you will find a special field where you can enter the system prompt. This is your command center.

Step-by-step instructions: Your first system prompt

- Load a model: Go to the Discover tab (magnifying glass icon) and download a model like Mistral 7B Instruct. Then switch to the Chat tab and load it there.

- Enter the system prompt: Click the wrench in the top right corner and enter your instruction, for example: ‘You are a helpful assistant who always responds in the style of a haiku.’ or ‘Behave like a best-selling author editing a novel.’

- Ask a question: Type a simple question into the input field below, e.g.: ‘What is a large language model?’

- See the result: The AI's answer will no longer be a normal reply but a haiku-style poem. This lets you see immediately how much influence the system prompt has!

Presets: Your toolbox for perfect prompts

Typing in a complicated system prompt every time is tedious. That's why there are presets! A preset is a .preset.json file that saves not only your system prompt but also all other settings, such as temperature or context length.

Once you've perfected a configuration, just click ‘Save Preset’. Give the preset a name (e.g. ‘Shakespearean Poet’) and you can reload it at any time with one click. This saves a lot of time and makes the work with the AI reproducible.
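The idea behind a preset (one saved bundle of system prompt and parameters that you reuse) carries over to your own scripts as well. The sketch stores such a bundle as plain JSON; the field names are made up for illustration and are not LM Studio's .preset.json schema. `build_request` is a hypothetical helper that turns the bundle into an OpenAI-compatible chat request body, and "local-model" is a placeholder model id.

```python
import json

# Hypothetical preset format for this sketch (NOT LM Studio's own .preset.json layout).
shakespeare_preset = {
    "name": "Shakespearean Poet",
    "system_prompt": "Answer in the style of Shakespeare.",
    "temperature": 0.8,
}


def save_preset(preset: dict, path: str) -> None:
    with open(path, "w", encoding="utf-8") as f:
        json.dump(preset, f, indent=2)


def load_preset(path: str) -> dict:
    with open(path, encoding="utf-8") as f:
        return json.load(f)


def build_request(preset: dict, model: str, user_text: str) -> dict:
    """Combine a preset with a question into a /v1/chat/completions request body."""
    return {
        "model": model,
        "temperature": preset["temperature"],
        "messages": [
            {"role": "system", "content": preset["system_prompt"]},
            {"role": "user", "content": user_text},
        ],
    }
```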

Pro tip: Prompt and model must fit together!

Most modern LLMs are trained on a specific prompt format. If you use the wrong system prompt, you may get nonsensical answers. The ‘instructions for use’ for each model can be found on its Hugging Face model card, which shows exactly what the system prompt should look like.

A well-known example is the DeepSeek Coder model series. These models expect a very specific system prompt. Without it, the AI may refuse to answer your programming questions because it lacks this guidance. This shows how closely the quality of the AI's output depends on the right system prompt.

Frequently Asked Questions (FAQ)

- Why do I need the system prompt? It sets the rules and personality for the entire conversation so that the AI stays consistent and does exactly what you expect it to do.

- Can I use one system prompt for all models? No. While general prompts often work, specialized models such as DeepSeek Coder require a very specific prompt. Always check the model's Hugging Face page first!

- My system prompt doesn't work! What should I do? Check whether the prompt format matches the model. Another common culprit is the inference parameters in the right panel: if the Prompt Eval Batch Size (n_batch) is much smaller than the Context Length (n_ctx), the AI can ‘forget’ its own instructions. Try adjusting both values to get the best results.

For power users: The LM Studio command line (CLI)

Do you like automating things and working directly in the terminal? Then the lms command line is just right for you. lms is the CLI tool that comes with LM Studio; it lets you script and automate your workflows and control your local LLMs directly from the terminal.

Important: Before you can use lms, you have to have started LM Studio at least once.

Setup is child's play:

- Bootstrap lms: Open your terminal (or PowerShell on Windows) and type the following command:

macOS/Linux:

    ~/.lmstudio/bin/lms bootstrap

Windows:

    cmd /c %USERPROFILE%/.lmstudio/bin/lms.exe bootstrap

- Check the installation: Open a new terminal window and just type lms. You should see a list of available commands.

The most important commands at a glance:

With these commands, you can run the basic functions of LM Studio directly in the terminal:

- Control the server: Start or stop the local server to use your models via API.

    lms server start
    lms server stop

- List models: Displays all models that you have on your computer or those that are currently loaded.

    lms ls   # lists all downloaded models
    lms ps   # lists the currently loaded models

- Loading and unloading models: Loads a model into memory or removes it.

You can even set parameters such as GPU usage (--gpu=1.0) and context length (--context-length=16384) directly.

    lms load TheBloke/phi-2-GGUF
    lms unload --all   # unloads all loaded models

The lms CLI is a powerful tool for anyone who wants to delve deeper into the world of local LLMs. Try it out and automate your AI projects!
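Because lms is an ordinary command-line program, you can also drive it from a script. A minimal Python sketch that only uses the commands and flags mentioned in this section (lms load with --gpu and --context-length, lms ps); it assumes lms is on your PATH after bootstrapping, and the helper names are hypothetical.

```python
import subprocess


def load_command(model: str, gpu: float = 1.0, context_length: int = 16384) -> list[str]:
    """Build the argument list for `lms load` with GPU offload and context length."""
    return ["lms", "load", model, f"--gpu={gpu}", f"--context-length={context_length}"]


def run(cmd: list[str]) -> str:
    """Run an lms command and return its standard output (raises if the command fails)."""
    result = subprocess.run(cmd, capture_output=True, text=True, check=True)
    return result.stdout


# Usage (requires LM Studio and lms to be installed):
# run(load_command("TheBloke/phi-2-GGUF"))
# print(run(["lms", "ps"]))
```

Building the argument list separately from running it keeps the command easy to inspect or log before anything is executed.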

The API: Your local OpenAI clone

Do you want to use your local models not only in the chat, but also integrate them directly into your own apps and projects? LM Studio makes it possible. The app provides an OpenAI-compatible API that feels like the real service, except that everything runs locally on your computer.

It's so easy to connect

The ingenious thing about it: you hardly need to change your existing scripts or clients for OpenAI. Just change the base URL of your API requests so that they point to your local LM Studio server (http://localhost:1234/v1). Your code thinks it's talking to OpenAI, but in reality everything stays on your computer.

Here are a few examples of what this looks like in different languages:

Python

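    from openai import OpenAI
    client = OpenAI(
        base_url="http://localhost:1234/v1",
        api_key="lm-studio",  # placeholder: the client requires a key, the local server doesn't need a real one
    )
    # The rest of your code stays the same.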

TypeScript

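    import OpenAI from 'openai';
    const client = new OpenAI({
      baseURL: "http://localhost:1234/v1", // note: the option is baseURL, not baseUrl
      apiKey: "lm-studio", // placeholder: the client requires a key, the local server doesn't need a real one
    });
    // ... the rest of your code remains the same ...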

Typical languages for API connection

In addition to Python and TypeScript (JavaScript), these languages are especially common for API connections because they have their own libraries for working with APIs:

- Java: With HTTP clients such as OkHttp or Apache HttpClient, REST requests can easily be sent to the LM Studio API. There are also dedicated OpenAI client libraries for Java that you can point at a custom base URL.

- Go: Go's standard library (net/http) is perfect for making HTTP requests. It is a lean and efficient choice for backend applications.

- C#: For .NET developers, HttpClient is the ideal tool for connecting to the API. Here, too, there are dedicated packages that make it easier to connect to OpenAI-compatible services.

- PHP: PHP is often used in web development. You can use the cURL extension or libraries such as Guzzle to send API requests.

- Ruby: Libraries such as faraday or the built-in net/http library can be used to talk to the LM Studio API.

- Rust: If you value speed and safety, use Rust. Libraries such as reqwest make API requests simple and secure.

The beauty of OpenAI compatibility: you are not limited to a specific language. As long as you can customize the base URL, the whole world of programming is open to you for interacting with your local LLMs.

The main API endpoints

LM Studio supports the most common OpenAI endpoints, so you can work seamlessly:

- GET /v1/models: Lists all models that are currently loaded in LM Studio. Perfect for making your projects dynamic.

- POST /v1/chat/completions: This is the main endpoint for chatting. You pass your conversation as a chat history and get a response from the AI. Your system prompt and all parameters (such as temperature) are applied here automatically.

- POST /v1/embeddings: Ideal for converting text into vectors. This is especially useful for RAG (Retrieval-Augmented Generation), i.e. chatting with your documents.

- POST /v1/completions: Even though OpenAI no longer actively supports this endpoint, you can use it in LM Studio to generate the continuation of a text. Note that chat models may show unexpected behavior here; base models are best suited for this feature.
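If you'd rather not install the openai package, these endpoints can also be called with Python's standard library alone. A sketch assuming the default server address http://localhost:1234/v1 and the standard OpenAI response shapes (`data[].id` for models, `choices[0].message.content` for chat, `data[].embedding` for embeddings); the helper names are hypothetical. The cosine-similarity function shows how embedding vectors are typically compared when ranking document chunks for RAG.

```python
import json
import math
import urllib.request
from typing import Optional

BASE_URL = "http://localhost:1234/v1"  # LM Studio's default local server address


def call(path: str, payload: Optional[dict] = None) -> dict:
    """GET (no payload) or POST (JSON payload) to the local server and decode the JSON reply."""
    data = json.dumps(payload).encode("utf-8") if payload is not None else None
    req = urllib.request.Request(BASE_URL + path, data=data,
                                 headers={"Content-Type": "application/json"})
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)


def list_models() -> list[str]:
    return [m["id"] for m in call("/models")["data"]]


def chat(model: str, messages: list[dict], temperature: float = 0.7) -> str:
    body = {"model": model, "messages": messages, "temperature": temperature}
    return call("/chat/completions", body)["choices"][0]["message"]["content"]


def embed(model: str, texts: list[str]) -> list[list[float]]:
    body = {"model": model, "input": texts}
    return [item["embedding"] for item in call("/embeddings", body)["data"]]


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """1.0 = same direction, 0.0 = orthogonal; higher means more similar text."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0
```

With the server running (for example via lms server start) and a model loaded, `chat(model_id, [{"role": "user", "content": "Hello!"}])` returns the reply text, where `model_id` is one of the ids from `list_models()`. `embed()` assumes an embedding model is available in LM Studio.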

With LM Studio's API, you are not limited to the graphical interface but can integrate the full power of your local AI directly into your own tools using the familiar OpenAI syntax.

Conclusion: Your AI adventure starts now!

You see? You're already almost an LM Studio expert! From downloading your first model to chatting with Llama, you've unleashed the power of local AI. LM Studio is perfect for beginners because it doesn't require any programming skills, protects your data and gives you access to a huge range of models. Step by step, it also offers really great advanced features and options.

What is your first project? A chatbot? A poetry generator? Or maybe you want to ask the AI to help with homework? No matter what, have fun!

Sources: LM Studio Masterclass | HuggingFace | Hot Guide AI | LMStudio Docs | LMStudio Community | LMS Discord | LMS Github | @lmstudio